Introduction to .NET Native

.NET Native is a compilation and packaging technology that compiles .NET applications to native code. It uses the optimizing backend of the C++ compiler and eliminates both the need for JIT compilation at runtime and any dependency on the .NET Framework installed on the target machine. Formerly known as “Project N”, .NET Native is currently in public preview. This post explores the internals of its compilation process and the runtime performance of the resulting executable.

At this time, .NET Native is available only for C# Windows Store apps compiled for x64 or ARM. The experiments below are therefore based on a simple Windows Store app that runs a series of benchmarks and compares the performance of .NET Native with standard JIT compilation and with equivalent C++ code.

It’s important to note that .NET Native compilation will eventually be available as part of the Windows Store submission and installation process (a.k.a. “Compiler in the Cloud”). App developers will not need to build .NET Native binaries and distribute them. However, for testing purposes, Microsoft provides a tool chain that you can run locally on your development machine to verify that the result of the compilation works correctly.

Installing and Enabling .NET Native

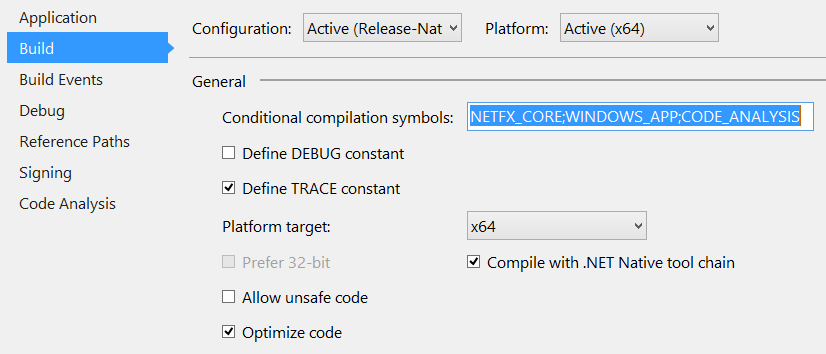

.NET Native is currently available in preview form as an extension to Visual Studio. After downloading and installing the .NET Native Developer Preview, you can right-click a Windows Store project in Visual Studio and choose “Enable for .NET Native”. This also runs a static analysis step that verifies you’re not using any features or APIs that are currently unsupported (more on this later). Then, to actually produce an application packaged using .NET Native, you need to check the “Compile with .NET Native tool chain” checkbox on your project’s Build properties pane.

The .NET Native tool chain is much slower than standard C# compilation (more than 20x slower on my benchmark app). For that reason, I recommend creating two separate project and solution configurations (I called them “Release” and “Release-Native”) and enabling the .NET Native tool chain in only one of them. You then don’t have to pay the extra build time on every run.

Compilation Process

The .NET Native compilation process consists of multiple steps that perform a number of transformations from the original IL code to a packaged and optimized executable (or DLL). You can inspect the whole process by looking at the build output in Visual Studio, which is fairly detailed, or inspecting the process tree in Sysinternals Process Monitor.

Step 1: C# Compilation

First, your C# code is compiled to IL using the unmodified C# compiler. .NET Native is not involved in this step, and begins operation from the resulting IL.

Step 2: IL Transformations and Optimizations

The .NET Native compilation process begins with ilc.exe, which performs multiple transformations on the original IL and generates some additional code that will eventually be merged into the final application. The result of this step is an intermediate MyApp.ilexe file, which is still IL and can be inspected with any .NET decompiler. ilc.exe performs the following major steps:

Step 2a: MCG (Marshaling Code Generation)

This step generates optimized interop calls and marshaling code for all the interop transitions performed by the application. The primary reasons for doing this are optimization and reducing the need for runtime marshaling stub generation. The result of this process for an app called MyApp is a file called MyApp.interop.g.cs. Here is a sample of what this file contains:

```csharp
// StubClass for 'Windows.Globalization.ILanguageFactory'
internal static partial class __StubClass_Windows_Globalization__ILanguageFactory
{
    [McgGeneratedMarshallingCode]
    [MethodImpl(MethodImplOptions.AggressiveInlining)]
    internal static IntPtr CreateLanguage(
        __ComObject __this,
        string languageTag)
    {
        IntPtr __ret = ForwardComInteropStubs.ReverseInteropShared_Func_string___IntPtr__(
            __this,
            languageTag,
            McgCurrentModule.s_McgTypeInfoIndex___com_Windows_Globalization__ILanguageFactory,
            McgNative.__vtable_Windows_Globalization__ILanguageFactory.idx_CreateLanguage
        );
        DebugAnnotations.PreviousCallContainsUserCode();
        return __ret;
    }
}
```
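To make the idea concrete, here is a rough C++ model of what such a stub boils down to: a call through a fixed slot of a COM-style vtable, where the slot index is known at build time. `FakeComObject`, `Slot`, `kIdxCreateLanguage` and the implementation functions are all hypothetical. Real WinRT vtables start with IUnknown/IInspectable slots and use varying signatures, so treat this as a sketch of the dispatch pattern, not of MCG’s actual output.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

struct FakeComObject;  // hypothetical COM-like object, defined below

// In this toy model every vtable slot has the same signature, which keeps
// the sketch free of function-pointer casts. Real COM vtables mix signatures.
using Slot = int (*)(FakeComObject* self, const std::string& arg, std::string* out);

struct FakeComObject {
    const Slot* vtbl;  // first field points at the vtable, as in COM
};

// Hypothetical slot index, playing the role of
// __vtable_Windows_Globalization__ILanguageFactory.idx_CreateLanguage.
constexpr std::size_t kIdxCreateLanguage = 1;

// The "generated stub": the slot index is a build-time constant, so the call
// needs no runtime stub generation, just one indirect call.
inline int CreateLanguageStub(FakeComObject* self, const std::string& tag, std::string* out) {
    assert(self != nullptr && self->vtbl != nullptr);
    return self->vtbl[kIdxCreateLanguage](self, tag, out);
}

// Sample slot implementations for the toy vtable.
inline int UnusedSlot(FakeComObject*, const std::string&, std::string*) { return -1; }

inline int CreateLanguageImpl(FakeComObject*, const std::string& tag, std::string* out) {
    *out = "Language(" + tag + ")";
    return 0;  // success, in the spirit of S_OK
}

const Slot kFactoryVtable[] = {UnusedSlot, CreateLanguageImpl};
```

A caller would construct `FakeComObject obj{kFactoryVtable};` and call `CreateLanguageStub(&obj, "en-US", &result)`, which lands in `CreateLanguageImpl`.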

Step 2b: sg.exe (Serialization Assembly Generation)

This step inspects the serialization types used by the application and its dependencies and produces an assembly that contains serialization-related code and helpers. This is again necessary because .NET Native needs to avoid any dynamic code generation at runtime, which is often required by serializers. The tool chain recognizes multiple types of serialization, including data contract serialization, XML serialization, and JSON serialization.
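As a rough illustration of the difference, the C++ sketch below shows the kind of serializer that can be produced at build time for one type. The fields are enumerated when the code is generated, so nothing is discovered through Reflection at runtime. `Person`, `JsonEscape` and `SerializePerson` are hypothetical names, and this is not the code sg.exe actually emits.

```cpp
#include <string>

// Hypothetical data-contract type from the app.
struct Person {
    std::string name;
    int age;
};

// Minimal JSON string escaping for the sketch: quotes and backslashes only;
// control characters are not handled.
inline std::string JsonEscape(const std::string& s) {
    std::string out = "\"";
    for (char ch : s) {
        if (ch == '"' || ch == '\\') out += '\\';
        out += ch;
    }
    out += '"';
    return out;
}

// What a build-time serializer generator could emit for Person: the field
// list is fixed in the generated code, so no Reflection is needed and no
// code has to be emitted at runtime.
inline std::string SerializePerson(const Person& p) {
    return "{\"name\":" + JsonEscape(p.name) + ",\"age\":" + std::to_string(p.age) + "}";
}
```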

Step 2c: ILMerge and “Tree Shaking”

In this step, all the application’s assemblies and intermediate generated code are merged into a single IL binary. It’s important to note that all the application’s dependencies are merged at this point, including any types from the BCL itself. However, .NET Native does not merge types from the desktop BCL. Instead, it uses a special optimized version of the BCL (which already has the preceding optimizations and transformations applied). You can find it under C:\Program Files (x86)\MSBuild\Microsoft\.NetNative\x64\ilc\lib\IL, with corefx.dll replacing mscorlib.dll.

By merging only the types that are actually used by the application (a process colloquially known as “tree shaking” or dead dependency elimination), .NET Native achieves two important goals: 1) the working set is considerably reduced because there’s no need to load and touch BCL assemblies; 2) the resulting assembly no longer has a dependency on the .NET Framework.
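Tree shaking is essentially a reachability analysis over a dependency graph. The sketch below assumes a toy graph in which each node (a method or type, named by a plain string) lists what it references. Everything reachable from the roots is kept and the rest is dropped. `DepGraph` and `ReachableFrom` are illustrative names. A real tool chain presumably also has to treat members requested through .rd.xml directives as roots, because Reflection-only uses are invisible in the call graph.

```cpp
#include <map>
#include <queue>
#include <set>
#include <string>
#include <vector>

// Toy dependency graph: each node (a type or method) lists what it references.
using DepGraph = std::map<std::string, std::vector<std::string>>;

// Breadth-first search from the roots; whatever is never reached is "shaken out".
inline std::set<std::string> ReachableFrom(const DepGraph& graph,
                                           const std::vector<std::string>& roots) {
    std::set<std::string> kept;
    std::queue<std::string> work;
    for (const auto& r : roots) {
        if (kept.insert(r).second) work.push(r);
    }
    while (!work.empty()) {
        std::string node = work.front();
        work.pop();
        auto it = graph.find(node);
        if (it == graph.end()) continue;  // leaf with no recorded dependencies
        for (const auto& dep : it->second) {
            if (kept.insert(dep).second) work.push(dep);
        }
    }
    return kept;
}
```

With a graph where `App.Main` uses `List<int>.Add` and `Console.WriteLine`, `List<int>.Add` uses `Array.Resize`, and an unused `Xml.Parse` uses `Regex.Match`, only the first four nodes are kept.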

Step 2d: Additional optimizations and transformation

There are some additional transformations applied during this step. I am not aware of all the details, but one example given in the //build video by .NET Native developers is adding explicit Invoke() methods to delegate types. In the desktop CLR, delegate types are augmented with an Invoke() method at runtime, automagically, whereas .NET Native requires the Invoke() method to be generated pre-runtime. For example, here is the generated Predicate<T>.Invoke method:

```csharp
// System.Predicate<T>
[MetadataTransformed(MetadataTransformation.OriginallyVirtual), DebuggerStepThrough]
public bool Invoke(T obj)
{
    return calli(System.Boolean(T), this.m_firstParameter, obj, this.m_functionPointer);
}
```
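The generated `Invoke` above is a single indirect call (`calli`) through the delegate’s stored function pointer, with the stored target passed as the first argument. A C++ analogue might look like the following. The struct layout and the names `PredicateDelegate`, `Threshold` and `GreaterThan` are assumptions made for illustration and do not reflect the real delegate layout in .NET Native.

```cpp
#include <cassert>

// Toy model of a delegate: a target object plus a function pointer that
// expects the target as its first argument. The field names mirror
// m_firstParameter / m_functionPointer from the decompiled code.
template <typename T>
struct PredicateDelegate {
    void* first_parameter;               // the closure target ("this")
    bool (*function_pointer)(void*, T);  // target method, called indirectly

    // The Invoke body is fixed ahead of time: one indirect call.
    bool Invoke(T obj) const {
        assert(function_pointer != nullptr);
        return function_pointer(first_parameter, obj);
    }
};

// Hypothetical target: "is the value greater than a captured threshold?"
struct Threshold {
    int limit;
};

inline bool GreaterThan(void* target, int value) {
    return value > static_cast<Threshold*>(target)->limit;
}
```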

Another transformation example given in the //build video is transforming multi-dimensional (a.k.a. rectangular) array accesses into one-dimensional array accesses. However, as far as I can tell, the current preview release does not perform this transformation. Multi-dimensional array performance is much worse than one-dimensional array performance, and also much worse than how the desktop CLR and JIT handle multi-dimensional arrays.
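The rewrite itself is simple index arithmetic. A rectangular array is stored contiguously in row-major order, so element [i, j] maps to offset i * cols + j. Here is a minimal C++ sketch of that mapping. The `RectMatrix` class is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// A rectangular int matrix stored as one contiguous 1-D array in row-major
// order: element [i, j] lives at index i * cols + j.
class RectMatrix {
public:
    RectMatrix(std::size_t rows, std::size_t cols)
        : rows_(rows), cols_(cols), data_(rows * cols) {}

    int& at(std::size_t i, std::size_t j) {
        assert(i < rows_ && j < cols_);  // one check per dimension, as for a [,] array
        return data_[i * cols_ + j];
    }
    std::size_t rows() const { return rows_; }
    std::size_t cols() const { return cols_; }
    const std::vector<int>& flat() const { return data_; }

private:
    std::size_t rows_, cols_;
    std::vector<int> data_;
};
```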

A third example of a transformation is adding Equals and GetHashCode methods for custom value types that haven’t overridden them. Typically, these methods are provided by System.ValueType and rely on Reflection to access the object’s fields. This is slower than a custom approach, and it also requires runtime Reflection, which .NET Native tries to avoid.
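A generated per-type implementation might look like the following C++ sketch, which compares and hashes the fields of a hypothetical `Point` struct directly. The hash-combining formula is the common boost-style recipe, picked for illustration. It is not what .NET Native generates.

```cpp
#include <cstddef>
#include <functional>

// Hypothetical custom value type that did not override Equals/GetHashCode.
struct Point {
    int x;
    int y;
};

// Field-by-field equality, the kind of method that can be generated per type
// ahead of time instead of walking the fields via Reflection at runtime.
inline bool PointEquals(const Point& a, const Point& b) {
    return a.x == b.x && a.y == b.y;
}

// Field-wise hash combine (boost-style mixing, illustrative only).
inline std::size_t PointHash(const Point& p) {
    std::size_t h = std::hash<int>{}(p.x);
    h ^= std::hash<int>{}(p.y) + 0x9e3779b9 + (h << 6) + (h >> 2);
    return h;
}
```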

Step 3: Compiling to MDIL

At the end of the ilc.exe invocation, we have an intermediate transformed file, MyApp.ilexe. It is this file that is then passed to nutc_driver.exe, which is the Microsoft MDIL Compiler that contains the C++ backend. It is this step that should provide the largest runtime performance gains (as opposed to startup time gains) thanks to the C++ optimizing compiler.

The result of this process is a MyApp.mdilexe file, which no longer contains IL code. MDIL — Machine Dependent Intermediate Language — is a light abstraction on top of native binary code. MDIL has a set of special tokens which are placeholders that must be replaced prior to actual execution (this replacement process is called binding, and is described next).

MDIL was originally introduced with Triton (“Compiler in the Cloud”), which is a service that precompiles Windows Phone apps to MDIL prior to deployment to client devices. At install time, the MDIL code is bound to the target platform APIs and a native binary is emitted. The original purpose of using MDIL is to allow easier servicing of Windows Phone applications after they are deployed. For example, when the BCL classes used by Windows Phone apps are updated, the MDIL can be re-bound to the new version by replacing placeholder tokens and emitting a new native binary.

This raises the question of why MDIL is necessary for .NET Native. As I described above, the resulting binary is not dependent on the version of the desktop .NET Framework installed on client devices. It then seems natural that there are no servicing concerns in this case, and that direct native compilation could be employed. I can speculate that the reason for using MDIL is to take advantage of an existing tool chain that produces a native binary in two steps — IL to MDIL to native. Additionally, this might afford better flexibility in the future, if the distribution chain changes such that the final MDIL to native compilation occurs on the client devices.

Step 4: Native Binding

The final step of .NET Native compilation takes MDIL binaries and binds them to a native binary that can run on target devices. rhbind.exe is the component that performs this binding step. The result is a native executable, MyApp.exe.
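To illustrate what binding means, here is a toy C++ model. The “MDIL” instructions carry symbolic tokens (such as a field offset) where a native binary would have concrete numbers, and binding resolves each token against the layout of a specific library version. Binding the same code against two layout tables yields two native variants without recompiling, which is the servicing property described for Triton. The instruction format, token spelling and function names are all invented; the real MDIL encoding is different.

```cpp
#include <cstdint>
#include <map>
#include <stdexcept>
#include <string>
#include <vector>

// Toy "MDIL" instruction: the operand may be a symbolic token instead of a
// concrete number.
struct ToyInstr {
    std::string opcode;    // e.g. "load"
    std::string token;     // empty if the operand is already concrete
    std::int64_t operand;  // concrete operand, filled in by binding
};

// Resolved layout facts for one version of the libraries (e.g. field offsets).
using LayoutTable = std::map<std::string, std::int64_t>;

// Binding: replace each token with its value for the target version.
inline std::vector<ToyInstr> Bind(std::vector<ToyInstr> code, const LayoutTable& layout) {
    for (auto& ins : code) {
        if (ins.token.empty()) continue;
        auto it = layout.find(ins.token);
        if (it == layout.end()) throw std::runtime_error("unresolved token: " + ins.token);
        ins.operand = it->second;
        ins.token.clear();
    }
    return code;
}
```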

The Minimal Runtime

Applications compiled with .NET Native do not have a dependency on the .NET Framework or the JIT, but they still require a set of runtime services. Notably, the garbage collector is still present. This minimal set of runtime services is called MRT, and you can see it in your project’s output directory as mrt100_app.dll.

This custom runtime also ships with a custom SOS and DAC, which you can find in C:\Program Files (x86)\MSBuild\Microsoft\.NetNative\x64 (mrt100sos.dll and mrt100dac_winamd64.dll). This is a different version of SOS that has a limited set of commands, but the useful basics are still present, including !GCRoot, !DumpHeap, !PrintException, and others.

.NET Native vs. NGen vs. Triton (“Compiler in the Cloud”)

There are now multiple frameworks that compile .NET applications to native code. It’s important to understand the differences between them. First, NGen (Native Image Generator) is still available to all .NET apps, not only Windows Store apps. NGen compiles IL to native code but does not preclude the option of using the JIT at runtime if necessary, as a fallback. It is the most flexible solution in terms of what you can do at runtime, but it does not perform any super-smart optimizations that the JIT doesn’t know about. Still, NGen can reduce application startup times in many cases.

Next, Triton (“Compiler in the Cloud”) is a technology similar to .NET Native which currently supports Windows Phone applications. I described how Triton works earlier, and the key difference compared to .NET Native is that the final binding step which produces a native binary is performed on the client’s device. Also, as far as I can tell, runtime JIT is not precluded.

Finally, .NET Native is the most restrictive tool of the three (more on the restrictions coming up). However, it uses (or is supposed to use) the C++ optimizing compiler, which produces higher quality code, and removes any unnecessary dependencies from the final binary, reducing memory usage and startup times.

Runtime Restrictions and .rd.xml Hint Files

In the preceding sections I alluded to the fact that there are some unsupported APIs or language features as far as .NET Native is concerned. Because .NET Native doesn’t ever use JIT compilation at runtime, some dynamic code generation and Reflection features are not available by default. Here are some examples:

- Reflecting over types (e.g. enumerating properties, methods)

- Using Reflection to invoke methods, set properties, etc.

- Dynamically emitting IL at runtime (e.g. for serialization purposes)

- Creating a dynamic closed generic type, for example: `typeof(List<>).MakeGenericType(new[]{typeof(int)})`

.NET Native ships with a static analysis tool that you can run from Visual Studio or from the command line, called Gatekeeper.exe. This tool produces a nice HTML report that indicates whether your code uses any suspicious APIs or language features which might make it impossible to precompile all the required dependencies. If you attempt to use any of these features at runtime, you might get exceptions such as MissingMethodException or MissingMetadataException to indicate that this is the case.

However, it is definitely possible to tune exactly what is and isn’t included in the final native binary. The .NET Native tool chain relies on .rd.xml hint files (a default one is added for you when you enable .NET Native on a Windows Store app project) that contain special directives such as “include metadata for this type” or “allow Reflection invoke on that type”. The full set of runtime directives and detailed documentation are available on MSDN, along with a list of scenarios and guidance on these directives. For example, if you use XAML data binding to view models, you will need to make sure that your view model classes are enabled for Reflection invocation at runtime.

Runtime Performance

In this section I would like to evaluate the runtime performance of .NET Native. One of the key performance gains expected from .NET Native is reduced startup time. This was well demonstrated with the Fresh Paint application when “Project N” was initially announced, and some pre-selected apps in the Windows Store already use .NET Native optimizations. What I would like to look at is how the optimizing compiler fares compared to the desktop JIT and to the standard Visual C++ 2013 compiler with all optimizations enabled.

For that purpose, I developed a set of small benchmarks. The full code is available here. The test machine has an Intel Core i7-3720QM (Ivy Bridge) CPU with Windows 8.1 Pro installed. The C++ compiler used is Visual C++ 2013 Update 2 RC.

AddVectors — for two arrays a and b, performs a[i] += b[i] for each element. There is an optimization opportunity here with respect to array bounds check elimination (if a.Length <= b.Length), which the desktop JIT performs, and also an opportunity for automatic vectorization, which the C++ compiler performs.

Desktop JIT – 1.0683ms

.NET Native – 1.07185ms

C++ – 0.58545ms (the loop was vectorized successfully with 128-bit SIMD instructions)
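The bounds check opportunity in AddVectors can be sketched in C++ as loop versioning. The loop tests once, up front, whether b is at least as long as a. If it is, every b[i] in the loop is in range and the per-element checks can be dropped; otherwise a fallback path keeps the original throw-partway-through behavior. `std::vector::at` stands in for a checked managed array access, and the function names are hypothetical. This is one common way to implement the optimization, not necessarily the one the desktop JIT uses.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Checked version: models a managed array access, which tests the index on
// every iteration (std::vector::at throws std::out_of_range).
inline void AddVectorsChecked(std::vector<int>& a, const std::vector<int>& b) {
    for (std::size_t i = 0; i < a.size(); ++i) a.at(i) += b.at(i);
}

// Versioned loop: a single up-front test selects a check-free fast path.
inline void AddVectorsHoisted(std::vector<int>& a, const std::vector<int>& b) {
    if (b.size() >= a.size()) {
        // Fast path: every b[i] with i < a.size() is provably in range.
        // A loop in this shape is also easy for a compiler to auto-vectorize.
        for (std::size_t i = 0; i < a.size(); ++i) a[i] += b[i];
    } else {
        // Slow path keeps the original semantics (throws partway through).
        AddVectorsChecked(a, b);
    }
}
```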

AddScalarToVector — for an array a, performs a[i] += 17 for each element. The same optimization opportunities apply.

Desktop JIT – 0.67816ms

.NET Native – 0.69931ms

C++ – 0.28991ms (the loop was vectorized successfully)

InterfaceDevirtualization – calls a method through an interface reference. There is an optimization opportunity here (which the desktop JIT employs) which can eliminate the interface call and replace it with a direct method call.

Desktop JIT – 2.07966ms

.NET Native – 3.33406ms

AbstractClassDevirtualization – calls a virtual method through an abstract base class reference. The same optimization opportunity applies.

Desktop JIT – 1.33005ms

.NET Native – 2.38919ms
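One way a compiler can remove such calls is guarded devirtualization. It tests the object’s dynamic type once, and if that is a known sealed (final) class, it calls the method directly, which also makes the method a candidate for inlining. The C++ sketch below illustrates the technique with hypothetical `Counter` and `SimpleCounter` types. The post does not say which technique the desktop JIT actually applies.

```cpp
#include <typeinfo>

// Hypothetical abstract base, standing in for an interface or abstract class.
struct Counter {
    virtual ~Counter() = default;
    virtual int Next() = 0;
};

// 'final' guarantees that no further overrides exist.
struct SimpleCounter final : Counter {
    int value = 0;
    int Next() override { return ++value; }
};

// Through a base reference: one indirect (virtual) call per iteration.
inline int SumVirtual(Counter& c, int n) {
    int sum = 0;
    for (int i = 0; i < n; ++i) sum += c.Next();
    return sum;
}

// Guarded devirtualization: check the dynamic type once; if it is the
// expected final class, call the method non-virtually. Otherwise fall back.
inline int SumDevirtualized(Counter& c, int n) {
    if (typeid(c) == typeid(SimpleCounter)) {
        auto& s = static_cast<SimpleCounter&>(c);
        int sum = 0;
        for (int i = 0; i < n; ++i) sum += s.SimpleCounter::Next();  // direct call
        return sum;
    }
    return SumVirtual(c, n);
}
```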

Memcpy — Naively copies bytes from one byte[] to another, in a simple for loop. There is an optimization opportunity here that can recognize this memcpy operation and replace it with a native call to memcpy (the C++ optimizing compiler does this).

Desktop JIT – 0.67651ms

.NET Native – 0.63342ms

C++ – 0.38526ms
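In C++ terms, the transformation replaces the first function below with the second. The function names are hypothetical. The substitution is only valid when the compiler can prove (or check at runtime) that the source and destination ranges do not overlap.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// The benchmark's shape: a byte-by-byte copy loop.
inline void CopyNaive(const std::vector<unsigned char>& src, std::vector<unsigned char>& dst) {
    assert(dst.size() >= src.size());
    for (std::size_t i = 0; i < src.size(); ++i) dst[i] = src[i];
}

// After idiom recognition: one call to the C runtime's memcpy, which copies
// in large chunks. Assumes src and dst are distinct, non-overlapping buffers.
inline void CopyMemcpy(const std::vector<unsigned char>& src, std::vector<unsigned char>& dst) {
    assert(dst.size() >= src.size());
    if (!src.empty()) std::memcpy(dst.data(), src.data(), src.size());
}
```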

MulDivShifts – performs a large number of multiplications by 3 and divisions by 4. There is an optimization opportunity here to replace the expensive MUL and DIV instructions with bit shifts or LEA instructions (for example, Visual C++ compiles x *= 3 to MOV EAX, x; LEA EAX, DWORD PTR [EAX+2*EAX]; MOV x, EAX).

Desktop JIT MulDivShifts – 4.47909ms

.NET Native MulDivShifts – 4.49315ms

C++ MulDivShifts – 4.4928ms
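The arithmetic behind these rewrites can be checked directly. In this C++ sketch, whose function names are hypothetical, multiplication by 3 becomes x + 2x, the computation a single LEA performs, and division by 4 becomes a right shift. Signed division needs a small fix-up, because C# and C++ integer division truncates toward zero while an arithmetic shift rounds toward negative infinity.

```cpp
#include <cstdint>

// x * 3 rewritten as x + 2*x, the arithmetic of LEA [EAX + 2*EAX].
// Unsigned arithmetic keeps overflow well defined.
inline std::uint32_t Mul3(std::uint32_t x) { return x + (x << 1); }

// Unsigned division by 4 is exactly a logical right shift by 2.
inline std::uint32_t UDiv4(std::uint32_t x) { return x >> 2; }

// Signed division by 4: adding 3 to negative dividends before the shift makes
// the result truncate toward zero. Assumes >> on a negative int32_t is an
// arithmetic shift (guaranteed since C++20; the mainstream behavior before).
inline std::int32_t SDiv4(std::int32_t x) {
    std::int32_t bias = (x < 0) ? 3 : 0;
    return (x + bias) >> 2;
}
```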

MatrixMultiplication – performs a naive O(n^3) multiplication of two integer matrices. Two versions of this code are included, one for rectangular (multi-dimensional) arrays and one for jagged arrays. A good optimizing compiler can apply several optimizations here, such as loop interchange, vectorization, FMA, etc.

Desktop JIT RectangularMatrixMultiplication – 50.30814ms

.NET Native RectangularMatrixMultiplication – 187.3474ms

Desktop JIT JaggedMatrixMultiplication – 33.11678ms

.NET Native JaggedMatrixMultiplication – 33.08566ms

C++ MatrixMultiplication – 21.11234ms
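Loop interchange, the first optimization mentioned above, can be shown in a few lines of C++. Both functions below compute the same product of two n x n row-major integer matrices, but the second reorders the loops so that the innermost loop walks memory with stride 1. The `Mat` alias and the function names are illustrative and are not taken from the benchmark code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// n x n matrices stored row-major in flat vectors.
using Mat = std::vector<int>;

// Textbook i-j-k order: the inner loop walks b down a column (stride n),
// which is cache-unfriendly for large n.
inline Mat MultiplyIJK(const Mat& a, const Mat& b, std::size_t n) {
    assert(a.size() == n * n && b.size() == n * n);
    Mat c(n * n, 0);
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t j = 0; j < n; ++j) {
            int sum = 0;
            for (std::size_t k = 0; k < n; ++k) sum += a[i * n + k] * b[k * n + j];
            c[i * n + j] = sum;
        }
    return c;
}

// Interchanged i-k-j order: the inner loop walks rows of b and c with
// stride 1, which is cache-friendly and easy to vectorize. Each c[i][j]
// still receives the same products, only summed in a different order
// (equivalent for integers barring overflow).
inline Mat MultiplyIKJ(const Mat& a, const Mat& b, std::size_t n) {
    assert(a.size() == n * n && b.size() == n * n);
    Mat c(n * n, 0);
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t k = 0; k < n; ++k) {
            const int aik = a[i * n + k];
            for (std::size_t j = 0; j < n; ++j) c[i * n + j] += aik * b[k * n + j];
        }
    return c;
}
```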

Mandelbrot – Calculates the Mandelbrot set within given dimensions. This is a benchmark that doesn’t access lots of memory but runs a tight loop with multiplications and additions that can benefit from vectorization, smart register allocation, instruction reordering, and other considerations that an optimizing compiler employs. Of all the benchmarks above, this is probably the most realistic one in terms of code quantity and quality.

Desktop JIT Mandelbrot – 32.18214ms

.NET Native Mandelbrot – 46.48744ms

C++ Mandelbrot – 15.26277ms

The results above are pretty conclusive. At this point, .NET Native does not employ any super-smart compiler optimizations that bring its performance up to par with C++ code. In a few cases, it even produces code that is slower than the desktop JIT’s (notably, the devirtualization, rectangular array, and Mandelbrot benchmarks). It’s important to remember that this is still a preview, and I will be very happy to repeat these measurements when updated versions of .NET Native are released. I know the .NET Native team is working hard on compiler optimizations.

Mandelbrot Case Study

Of the benchmarks above, I had a particular interest in the Mandelbrot benchmark (among other reasons, my wife uses it as a programming assignment in interviews at her workplace). The C++ compiler did a really great job there compared to the desktop JIT and the .NET Native tool chain, so I asked the .NET Native team to look into why. Ian Bearman from the .NET Native team did the tedious performance analysis and concluded that the .NET Native version introduces a store-to-load forwarding hazard when accessing a local variable in the tightest loop: it mixes 16-byte and 8-byte memory accesses in a way that prevents store-to-load forwarding on many CPUs. (For example, if two 8-byte stores to a 16-byte local variable are followed by a single 16-byte load, most CPUs cannot forward the stored data from the store buffer, and the load stalls until the stores have been written to the cache.)

Although this particular hazard is likely to be fixed in a future release of .NET Native, I was left wondering why there is a need for memory accesses in the tightest part of the loop at all. Here is what it looks like:

```csharp
Complex c = new Complex(xpos, ypos);
Complex z = new Complex(0.0, 0.0);
int i = 0;
for (; i < MandelbrotIterationsUntilEscape; ++i)
{
    if (z.MagnitudeSquared > MandelbrotEscapeThresholdSquared)
        break;
    z = z*z + c;
}
```

There are three local variables here (i, z, and c), and it seemed to me that they can all be stored in registers. Surprisingly, even the Visual C++ compiler does not store them all in registers, and requires stack (memory) accesses to z. Then, I tested the same code with Clang. Sure enough, Clang’s running time for the same loop was 8.61277ms on average, which is almost 2x faster than Visual C++. After inspecting Clang’s generated assembly code, I was able to verify that the tight loop variables are all stored in registers, and the only memory access is a read for the MandelbrotEscapeThresholdSquared constant. Take a look:

```asm
LBB0_3:
    vmulsd   XMM2, XMM7, XMM7
    vmulsd   XMM3, XMM0, XMM0
    vaddsd   XMM4, XMM3, XMM2
    vucomisd XMM4, QWORD PTR [RIP + LCPI0_3]
    ja       LBB0_5
    vsubsd   XMM2, XMM2, XMM3
    vaddsd   XMM2, XMM6, XMM2
    vmulsd   XMM0, XMM0, XMM7
    vaddsd   XMM0, XMM0, XMM0
    vaddsd   XMM0, XMM5, XMM0
    inc      EDX
    cmp      EDX, 1000
    vmovaps  XMM7, XMM2
    jl       LBB0_3
LBB0_5:
```

Note that even though the preceding assembly listing uses AVX vector (SIMD) instructions, they are not operating on real vectors. VMULSD, VSUBSD, VADDSD and VUCOMISD are all scalar instructions that use only the low 64 bits of the 128-bit XMM registers. It is definitely possible to vectorize the Mandelbrot algorithm with very good results, and in fact the Microsoft.Bcl.Simd samples I blogged about last week cover one approach to its vectorization.
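For reference, the loop Clang compiled corresponds to C++ along the following lines. Here z is split into two double locals, so nothing except the threshold constant needs to come from memory: one register each for the real and imaginary parts, as in the listing. This is a sketch, not the benchmark source. The function name and parameters are assumptions, and the escape threshold and iteration limit are passed in rather than taken from the original constants.

```cpp
// Escape-time iteration for one point c = cr + ci*i, with z = zr + zi*i
// kept in two scalar locals so every loop variable can live in a register.
inline int MandelbrotIterations(double cr, double ci, int max_iterations,
                                double escape_threshold_squared) {
    double zr = 0.0, zi = 0.0;
    int i = 0;
    for (; i < max_iterations; ++i) {
        const double zr2 = zr * zr;
        const double zi2 = zi * zi;
        if (zr2 + zi2 > escape_threshold_squared) break;  // |z|^2 test
        zi = 2.0 * zr * zi + ci;  // Im(z*z + c), uses the old zr
        zr = zr2 - zi2 + cr;      // Re(z*z + c)
    }
    return i;
}
```

For example, `MandelbrotIterations(0.0, 0.0, 1000, 4.0)` returns 1000, since the origin never escapes.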

Summary

.NET Native is an exciting new technology that has the potential of considerably reducing application startup time and memory usage, and bringing C# code performance up to par with C++ code by using the C++ optimizing backend. At this time, however, these optimizations aren’t quite there.

The internal details about the .NET Native compilation process are based on the //build video by Mani Ramaswamy and Shawn Farkas and my own reverse engineering work. Any inaccuracies and errors are my own, and I welcome feedback that can improve this article. Thanks for reading.