If you don't want dedicated hardware for quick setup and experimentation, a virtual machine is the right choice.

Setting up the Virtual Machine

Step 1: Download a virtual machine player

You can download VM Player for free here.

Go ahead and install it on your host OS.

Step 2: Download a virtual machine

You can download a legitimate CentOS 6 image here.

You can even download the Cloudera QuickStart VM, which is aimed at big data work.

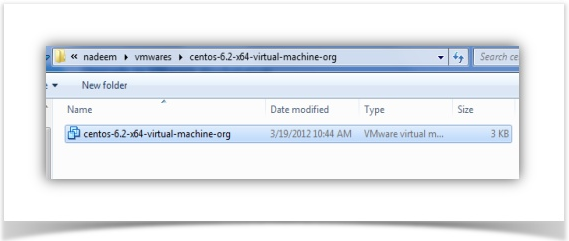

После загрузки распакуйте его в подходящее место в вашей операционной системе.

Шаг 3: Запустите виртуальную машину

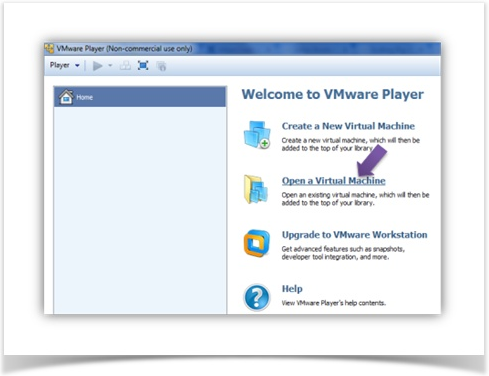

Нажмите «Открыть виртуальную машину». Выберите тот, который вы уже извлекли

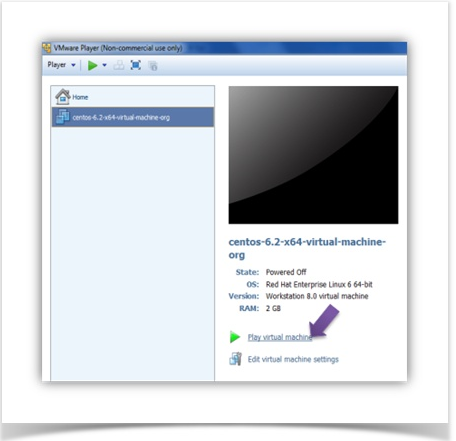

Click "Play virtual machine".

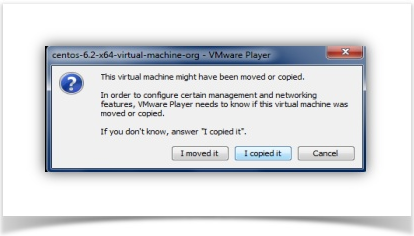

You may need to help VM Player by answering "I copied it" when the prompt appears.

Log in with the password "tomtom".

A successful login shows the desktop screen.

Setting up Java

Step 1: Make sure the CentOS system is fully up to date

Become the superuser and run yum update.

[tom@localhost ~]$ su

Password:

[root@localhost tom]# yum update

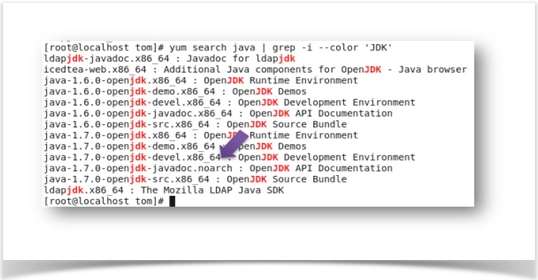

Step 2: Install the JDK

[root@localhost tom]# yum search java | grep -i --color 'JDK'

On Windows, this is a one-click installation.

[root@localhost tom]# yum install java-1.7.0-openjdk-devel.x86_64

[root@localhost tom]# javac -version

javac 1.7.0_55

On CentOS 6, the JDK is installed in the following folder:

[root@localhost java-1.7.0-openjdk-1.7.0.55.x86_64]# ll /usr/lib/jvm

total 4

lrwxrwxrwx. 1 root root 26 Jun 20 10:29 java -> /etc/alternatives/java_sdk

lrwxrwxrwx. 1 root root 32 Jun 20 10:29 java-1.7.0 -> /etc/alternatives/java_sdk_1.7.0

drwxr-xr-x. 7 root root 4096 Jun 20 10:29 java-1.7.0-openjdk-1.7.0.55.x86_64

lrwxrwxrwx. 1 root root 34 Jun 20 10:29 java-1.7.0-openjdk.x86_64 -> java-1.7.0-openjdk-1.7.0.55.x86_64

lrwxrwxrwx. 1 root root 34 Jun 20 10:29 java-openjdk -> /etc/alternatives/java_sdk_openjdk

lrwxrwxrwx. 1 root root 21 Jun 20 10:29 jre -> /etc/alternatives/jre

lrwxrwxrwx. 1 root root 27 Jun 20 10:29 jre-1.7.0 -> /etc/alternatives/jre_1.7.0

lrwxrwxrwx. 1 root root 38 Jun 20 10:29 jre-1.7.0-openjdk.x86_64 -> java-1.7.0-openjdk-1.7.0.55.x86_64/jre

lrwxrwxrwx. 1 root root 29 Jun 20 10:29 jre-openjdk -> /etc/alternatives/jre_openjdk
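
The listing above is mostly symlinks into /etc/alternatives, so it can help to resolve where javac really lives before setting JAVA_HOME later on. Below is a minimal sketch, assuming GNU readlink is available (it is on CentOS); the function name java_home_from is hypothetical and not part of the original setup.

```shell
# Hypothetical helper: print the JDK root that a given javac path
# ultimately points to.
java_home_from() {
    # readlink -f follows every symlink in the chain to the real file
    real_javac=$(readlink -f "$1") || return 1
    # Strip the trailing /bin/javac to get the JDK root
    dirname "$(dirname "$real_javac")"
}

# Usage on the VM (assumes javac is on PATH):
#   java_home_from "$(command -v javac)"
```

On the VM described here, this should print the versioned directory under /usr/lib/jvm rather than one of the alternatives links.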

Setting up Hadoop

Step 3: Create a new user to run Hadoop

[root@localhost ~]# useradd hadoop

[root@localhost ~]# passwd hadoop

Changing password for user hadoop.

New password:

Retype new password:

passwd: all authentication tokens updated successfully.

[root@localhost ~]#

Step 4: Download Hadoop

[tom@localhost ~]$ su hadoop

Password:

[hadoop@localhost ~]$

[hadoop@localhost Downloads]$ wget 'http://apache.arvixe.com/hadoop/common/hadoop-2.2.0/hadoop-2.2.0.tar.gz'

[hadoop@localhost Downloads]$ tar -xvf hadoop-2.2.0.tar.gz

For now, we can place Hadoop in the /usr/share/hadoop folder.

[root@localhost Downloads]# mkdir /usr/share/hadoop

[root@localhost Downloads]# mv hadoop-2.2.0 /usr/share/hadoop/2.2.0

Step 5: Change ownership

[root@localhost Downloads]# cd /usr/share

[root@localhost share]# chown -R hadoop hadoop

[root@localhost share]# chgrp -R hadoop hadoop

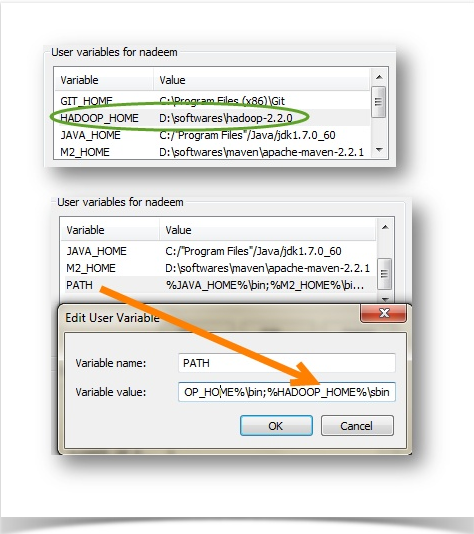

Step 6: Update Environment Variables

[hadoop@localhost ~]$ vi ~/.bash_profile

JAVA_HOME=/usr/lib/jvm/java-1.7.0-openjdk-1.7.0.55.x86_64

HADOOP_HOME=/usr/share/hadoop/2.2.0

export JAVA_HOME

export HADOOP_HOME

export HADOOP_MAPRED_HOME=${HADOOP_HOME}

export HADOOP_COMMON_HOME=${HADOOP_HOME}

export HADOOP_HDFS_HOME=${HADOOP_HOME}

export YARN_HOME=${HADOOP_HOME}

PATH=$PATH:$HOME/bin:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export PATH

All of this is easier on Windows.

[hadoop@localhost Downloads]$ source ~/.bash_profile

[hadoop@localhost ~]$ vi /usr/share/hadoop/2.2.0/etc/hadoop/hadoop-env.sh

# The java implementation to use.

#export JAVA_HOME=${JAVA_HOME}

export JAVA_HOME=/usr/lib/jvm/java-1.7.0-openjdk-1.7.0.55.x86_64

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"
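
The edits above are made by hand in vi. If you would rather script them, here is a minimal sketch of an idempotent append, so running the setup twice does not duplicate lines. The function name append_once is hypothetical and not something Hadoop provides.

```shell
# Hypothetical helper: append a line to a file only if that exact line
# is not already present.
append_once() {
    file=$1
    line=$2
    # -q quiet, -x whole-line match, -F fixed string (no regex)
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage (paths taken from the steps above):
#   append_once ~/.bash_profile 'export HADOOP_HOME=/usr/share/hadoop/2.2.0'
```

Remember to run source ~/.bash_profile afterwards, as in the manual steps.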

Step 7: Test Hadoop

[hadoop@localhost Downloads]$ hadoop version

Hadoop 2.2.0

Subversion https://svn.apache.org/repos/asf/hadoop/common -r 1529768

Compiled by hortonmu on 2013-10-07T06:28Z

Compiled with protoc 2.5.0

From source with checksum 79e53ce7994d1628b240f09af91e1af4

This command was run using /usr/share/hadoop/2.2.0/share/hadoop/common/hadoop-common-2.2.0.jar

Step 8: Fix SSH Issues

If you get SSH-related errors while starting DFS (NameNode, DataNode) or YARN, SSH may not be installed or running.

Issue #1:

[hadoop@localhost ~]$ start-dfs.sh

VM: ssh: Could not resolve hostname VM: Name or service not known

Issue #2:

[hadoop@localhost Downloads]$ ssh localhost

ssh: connect to host localhost port 22: Connection refused

Solution

Install SSH server/client

[root@localhost Downloads]# yum -y install openssh-server openssh-clients

Enable SSH

[root@localhost Downloads]# chkconfig sshd on

[root@localhost Downloads]# service sshd start

Generating SSH1 RSA host key: [ OK ]

Generating SSH2 RSA host key: [ OK ]

Generating SSH2 DSA host key: [ OK ]

Starting sshd: [ OK ]

[root@localhost Downloads]#

Make sure port 22 is open

[root@localhost Downloads]# netstat -tulpn | grep :22

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 8662/sshd

tcp 0 0 :::22 :::* LISTEN 8662/sshd

Create SSH keys with an empty passphrase so that you don't have to enter a password manually while Hadoop runs

[hadoop@localhost ~]$ ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

Generating public/private dsa key pair.

Your identification has been saved in /home/hadoop/.ssh/id_dsa.

Your public key has been saved in /home/hadoop/.ssh/id_dsa.pub.

The key fingerprint is:

08:d6:c1:66:2c:c8:c5:7b:96:d8:cb:fc:8d:19:16:38 hadoop@localhost.localdomain

The key's randomart image is:

+--[ DSA 1024]----+

| . +.o. |

| o o.=. |

| oB.o |

| .o.E.. |

| =.oS. |

| + o |

| o = |

| + . |

| |

+-----------------+

[hadoop@localhost ~]$ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

[hadoop@localhost ~]$ chmod 644 ~/.ssh/authorized_keys

[hadoop@localhost ~]$
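
If ssh localhost still asks for a password after this, file permissions are a common cause: with StrictModes enabled, sshd ignores authorized_keys when it or the ~/.ssh directory is writable by other users. The sketch below sets stricter modes than the 644 used above (700 on the directory, 600 on the file); the function name secure_ssh_dir is hypothetical.

```shell
# Hypothetical helper: tighten permissions on an SSH directory.
secure_ssh_dir() {
    dir=$1
    # Only the owner may enter or list the directory
    chmod 700 "$dir" || return 1
    # Only the owner may read or write the key list, if it exists
    if [ -f "$dir/authorized_keys" ]; then
        chmod 600 "$dir/authorized_keys"
    fi
}

# Usage as the hadoop user:
#   secure_ssh_dir ~/.ssh
```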

Verify that SSH no longer prompts for a password

[hadoop@localhost ~]$ ssh localhost

Last login: Fri Jun 20 15:08:09 2014 from localhost.localdomain

[hadoop@localhost ~]$

Start all Components

[hadoop@localhost Desktop]$ start-all.sh

[hadoop@localhost Desktop]$ jps

3542 NodeManager

3447 ResourceManager

3576 Jps

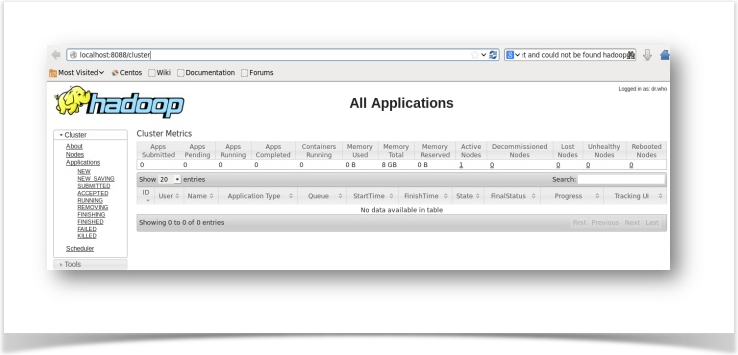

Hadoop Cluster

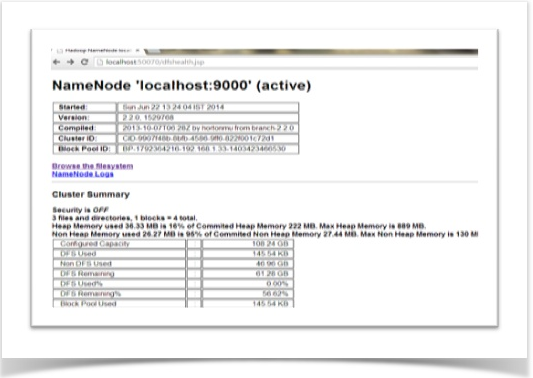

HDFS

Running Hadoop in Pseudo-Distributed mode

Step 1: Update core-site.xml

Add the following to core-site.xml

[hadoop@localhost ~]$ vi $HADOOP_HOME/etc/hadoop/core-site.xml

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

<final>true</final>

</property>

</configuration>
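
In Hadoop 2.x, fs.default.name is a deprecated alias for fs.defaultFS; it still works, but the newer key avoids a deprecation warning. Here is a minimal sketch that writes an equivalent file using the newer key. The function name write_core_site is hypothetical, and note that it overwrites any existing core-site.xml in the target directory.

```shell
# Hypothetical helper: write a minimal core-site.xml into a config
# directory. WARNING: replaces an existing core-site.xml there.
write_core_site() {
    conf_dir=$1
    # Default to the URI used in this tutorial
    uri=${2:-hdfs://localhost:9000}
    cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>$uri</value>
  </property>
</configuration>
EOF
}

# Usage:
#   write_core_site "$HADOOP_HOME/etc/hadoop"
```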

Step 2: Update hdfs-site.xml

Add the following to hdfs-site.xml

[hadoop@localhost ~]$ vi $HADOOP_HOME/etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/hadoop/dfs/name</value>

<final>true</final>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/hadoop/dfs/data</value>

<final>true</final>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

</configuration>
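
After editing, it is worth double-checking what actually ended up in the file. The sketch below reads one property back with awk, assuming each name and value tag sits on its own line as in the file above. The function name get_prop is hypothetical, and this is not a general XML parser.

```shell
# Hypothetical helper: print the value of one property from a Hadoop
# *-site.xml file. Assumes <name> and <value> are each on their own line.
get_prop() {
    awk -v key="$2" '
        # Remember the most recent property name
        /<name>/  { gsub(/.*<name>|<\/name>.*/, ""); name = $0; next }
        # Print the value that follows the requested name, then stop
        /<value>/ { gsub(/.*<value>|<\/value>.*/, ""); if (name == key) { print; exit } }
    ' "$1"
}

# Usage:
#   get_prop "$HADOOP_HOME/etc/hadoop/hdfs-site.xml" dfs.namenode.name.dir
```

Once Hadoop itself is on the PATH, hdfs getconf -confKey dfs.namenode.name.dir should report the value Hadoop resolves, which is the more authoritative check.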

Step 3: Update mapred-site.xml

Add the following to mapred-site.xml

[hadoop@localhost ~]$ vi $HADOOP_HOME/etc/hadoop/mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapred.system.dir</name>

<value>file:/home/hadoop/mapred/system</value>

<final>true</final>

</property>

<property>

<name>mapred.local.dir</name>

<value>file:/home/hadoop/mapred/local</value>

<final>true</final>

</property>

<property>

<name>mapred.job.tracker</name>

<value>localhost:8021</value>

</property>

</configuration>
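
The Hadoop 2.2.0 tarball ships mapred-site.xml.template rather than mapred-site.xml, so the file may not exist when you first open it in vi. A minimal sketch that creates it from the template only when it is missing; the function name ensure_mapred_site is hypothetical.

```shell
# Hypothetical helper: create mapred-site.xml from the shipped template,
# but never overwrite a file that already exists.
ensure_mapred_site() {
    conf_dir=$1
    if [ ! -f "$conf_dir/mapred-site.xml" ] && [ -f "$conf_dir/mapred-site.xml.template" ]; then
        cp "$conf_dir/mapred-site.xml.template" "$conf_dir/mapred-site.xml"
    fi
}

# Usage:
#   ensure_mapred_site "$HADOOP_HOME/etc/hadoop"
```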

Step 4: Update yarn-site.xml

Add the following to yarn-site.xml

[hadoop@localhost ~]$ vi $HADOOP_HOME/etc/hadoop/yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>localhost:8032</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

</configuration>

Step 5: Format the NameNode

[hadoop@localhost ~]$ hdfs namenode -format

14/06/20 21:19:34 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = localhost.localdomain/127.0.0.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.2.0

STARTUP_MSG: classpath = /usr/share/hadoop/2.2.0/etc/hadoop:/usr/share/hadoop/2.2.0/share/hadoop/common/lib/mockito-all-1.8.5.jar:/usr/share/hadoop/2.2.0/share/hadoop/common/lib/hadoop-auth-2.2.0.jar *****

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common -r 1529768; compiled by 'hortonmu' on 2013-10-07T06:28Z

STARTUP_MSG: java = 1.7.0_55

************************************************************/

14/06/20 21:19:34 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

14/06/20 21:19:35 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Formatting using clusterid: CID-d9351768-346c-43e4-b0f2-5a182a12ec4a

14/06/20 21:19:36 INFO namenode.HostFileManager: read includes:

HostSet(

)

14/06/20 21:19:36 INFO namenode.HostFileManager: read excludes:

HostSet(

)

14/06/20 21:19:36 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000

14/06/20 21:19:36 INFO util.GSet: Computing capacity for map BlocksMap

14/06/20 21:19:36 INFO util.GSet: VM type = 64-bit

14/06/20 21:19:36 INFO util.GSet: 2.0% max memory = 966.7 MB

14/06/20 21:19:36 INFO util.GSet: capacity = 2^21 = 2097152 entries

14/06/20 21:19:36 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

14/06/20 21:19:36 INFO blockmanagement.BlockManager: defaultReplication = 3

14/06/20 21:19:36 INFO blockmanagement.BlockManager: maxReplication = 512

14/06/20 21:19:36 INFO blockmanagement.BlockManager: minReplication = 1

14/06/20 21:19:36 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

14/06/20 21:19:36 INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks = false

14/06/20 21:19:36 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

14/06/20 21:19:36 INFO blockmanagement.BlockManager: encryptDataTransfer = false

14/06/20 21:19:36 INFO namenode.FSNamesystem: fsOwner = hadoop (auth:SIMPLE)

14/06/20 21:19:36 INFO namenode.FSNamesystem: supergroup = supergroup

14/06/20 21:19:36 INFO namenode.FSNamesystem: isPermissionEnabled = false

14/06/20 21:19:36 INFO namenode.FSNamesystem: HA Enabled: false

14/06/20 21:19:36 INFO namenode.FSNamesystem: Append Enabled: true

14/06/20 21:19:36 INFO util.GSet: Computing capacity for map INodeMap

14/06/20 21:19:36 INFO util.GSet: VM type = 64-bit

14/06/20 21:19:36 INFO util.GSet: 1.0% max memory = 966.7 MB

14/06/20 21:19:36 INFO util.GSet: capacity = 2^20 = 1048576 entries

14/06/20 21:19:36 INFO namenode.NameNode: Caching file names occuring more than 10 times

14/06/20 21:19:36 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

14/06/20 21:19:36 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

14/06/20 21:19:36 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

14/06/20 21:19:36 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

14/06/20 21:19:36 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

14/06/20 21:19:36 INFO util.GSet: Computing capacity for map Namenode Retry Cache

14/06/20 21:19:36 INFO util.GSet: VM type = 64-bit

14/06/20 21:19:36 INFO util.GSet: 0.029999999329447746% max memory = 966.7 MB

14/06/20 21:19:36 INFO util.GSet: capacity = 2^15 = 32768 entries

14/06/20 21:19:37 INFO common.Storage: Storage directory /home/hadoop/dfs/name has been successfully formatted.

14/06/20 21:19:37 INFO namenode.FSImage: Saving image file /home/hadoop/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

14/06/20 21:19:37 INFO namenode.FSImage: Image file /home/hadoop/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 198 bytes saved in 0 seconds.

14/06/20 21:19:37 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

14/06/20 21:19:37 INFO util.ExitUtil: Exiting with status 0

14/06/20 21:19:37 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at localhost.localdomain/127.0.0.1

************************************************************/

[hadoop@localhost ~]$
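
Formatting is meant to be done once; formatting a name directory that is already in use discards the existing HDFS metadata. A small guard like the one below can help avoid that. It relies on the current/VERSION file that formatting creates inside the name directory; the function name namenode_is_formatted is hypothetical.

```shell
# Hypothetical helper: succeed if the given NameNode directory already
# holds a formatted filesystem (detected via its current/VERSION file).
namenode_is_formatted() {
    [ -f "$1/current/VERSION" ]
}

# Usage with the directory configured in hdfs-site.xml above:
#   namenode_is_formatted /home/hadoop/dfs/name || hdfs namenode -format
```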

Step 6: Start Hadoop components

[hadoop@localhost ~]$ start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

14/06/20 21:22:54 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting namenodes on [localhost]

localhost: starting namenode, logging to /usr/share/hadoop/2.2.0/logs/hadoop-hadoop-namenode-localhost.localdomain.out

localhost: starting datanode, logging to /usr/share/hadoop/2.2.0/logs/hadoop-hadoop-datanode-localhost.localdomain.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /usr/share/hadoop/2.2.0/logs/hadoop-hadoop-secondarynamenode-localhost.localdomain.out

14/06/20 21:23:26 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

starting yarn daemons

resourcemanager running as process 3447. Stop it first.

localhost: nodemanager running as process 3542. Stop it first.

[hadoop@localhost ~]$ jps

3542 NodeManager

5113 DataNode

5481 Jps

5286 SecondaryNameNode

5016 NameNode

3447 ResourceManager
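
In pseudo-distributed mode, jps should list all five daemons shown above. Rather than checking by eye, the sketch below reports which ones are absent from a given jps output. The function name missing_daemons is hypothetical; the daemon names are the ones from the listing above.

```shell
# Hypothetical helper: given jps output as one string, print the
# expected Hadoop daemons that do not appear in it.
missing_daemons() {
    jps_output=$1
    missing=""
    for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # -w matches whole words, so NameNode does not match SecondaryNameNode
        printf '%s\n' "$jps_output" | grep -qw -- "$d" || missing="$missing $d"
    done
    # Drop the leading space before printing
    printf '%s\n' "${missing# }"
}

# Usage:
#   missing_daemons "$(jps)"
```

An empty line means everything is running. Run against the earlier jps output, where only YARN was up, it would name the three HDFS daemons.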

Step 7: Stop Hadoop components

[hadoop@localhost ~]$ stop-all.sh

This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh

14/06/20 21:27:55 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Stopping namenodes on [localhost]

localhost: stopping namenode

localhost: stopping datanode

Stopping secondary namenodes [0.0.0.0]

0.0.0.0: stopping secondarynamenode

14/06/20 21:28:18 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

stopping yarn daemons

stopping resourcemanager

localhost: stopping nodemanager

no proxyserver to stop

Windows Issues

Issue #1: JAVA_HOME related issue

Issue #2: Failed to locate winutils binary

Solution: Download this, then copy the contents of the hadoop-common-2.2.0/bin folder into the HADOOP_HOME/bin folder. Alternatively, you can build Hadoop for your own environment as described here.

Issue #3: “The system cannot find the batch label specified”

Solution: Download unix2dos from here and run it on all the Hadoop batch files.