Recently my Percona colleague Yves Trudeau and my industry colleague Marco Tusa published some materials on Amazon Aurora. Amazon Aurora is indeed a hot topic these days, and we get many customer inquiries about this technology. I decided to form my own opinion, and nothing is better than personal, hands-on experience, which I am going to share here.

The materials I will refer to:

- [1] Presentation from Amazon re:Invent: https://www.youtube.com/watch?v=CwWFrZGMDds

- [2] Slides used in the presentation above: http://www.slideshare.net/AmazonWebServices/amazon-aurora

- [3] Amazon's whitepaper with Sysbench benchmarks: https://d0.awsstatic.com/product-marketing/Aurora/RDS_Aurora_Performance_Assessment_Benchmarking_v1-2.pdf

Presentation [1] gives a good general initial overview. However, there is one statement the presenter made that I would like to highlight: “Amazon Aurora is a MySQL-compatible relational database engine that combines the speed and availability of high-end commercial databases with the simplicity and cost-effectiveness of open source databases.”

This does not claim that Amazon Aurora is an open source database, but the goal is certainly to make Amazon look comparable to open source.

I would like to make it clear that Amazon Aurora is not open source. Amazon Aurora is based on the Open Source MySQL Edition, but is not open source by itself; it is a proprietary, closed-source database.

By choosing Amazon Aurora you are fully dependent on Amazon for bug fixes and upgrades. In this regard you are locked in to Amazon RDS, though it is not the same lock-in as with a commercial database like Oracle or MS-SQL: you should still be able to migrate your application to community MySQL relatively easily.

Amazon uses a GPLv2 loophole, which allows it not to publish source code in a cloud model. How is Amazon Aurora different from Percona Server or MariaDB, both of which are required to publish their sources? It comes down to the distribution model. GPLv2 places a restriction on the traditional distribution model: if you download software or receive a hard copy of software binaries, you also have the right to request the corresponding source code. This is not the case with cloud computing: there you do not download anything, you just launch an instance. GPLv2 places no restrictions on this case, so Amazon is in compliance with GPLv2.

Bug Fixes

Speaking of bug fixes, Amazon Aurora reports itself as “version: 5.6.10, version_comment: MySQL Community Server (GPL)”. MySQL 5.6.10 was released on 2013-02-05, which was 2.5 years ago. It is not clear whether Aurora includes 2.5 years' worth of bug fixes and simply did not update the version, or whether these really are binaries based on a 2.5-year-old code base.

For example, let’s take the MySQL bug http://bugs.mysql.com/bug.php?id=70577, which was fixed in 5.6.15. I do not see the bug fix present in Amazon Aurora 5.6.10.

Another bug, http://bugs.mysql.com/bug.php?id=68041, was fixed in MySQL 5.6.13 but is still present in Amazon Aurora.

What about InnoDB’s code base? The bug, http://bugs.mysql.com/bug.php?id=72548, with InnoDB fulltext search, was fixed in MySQL 5.6.20 (released more than a year ago, on 2014-07-31) and is still present in Amazon Aurora.

This leaves me with the impression that the general Aurora codebase was not updated recently.
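The comparison above can be sketched as a small helper. This is only an illustration: the function names and the `FIXED_IN` table are my own, built from the three bugs listed here, and it compares version strings only. As noted earlier, the reported version may not reflect what is actually in the binaries, so the real test is still reproducing each bug.

```python
def parse_version(text):
    """Turn a version such as '5.6.10' or '5.6.10-log' into (5, 6, 10)."""
    numeric = text.split("-")[0]
    return tuple(int(part) for part in numeric.split("."))


def fix_expected(reported_version, fixed_in):
    """True if a fix released in `fixed_in` should be present in `reported_version`."""
    return parse_version(reported_version) >= parse_version(fixed_in)


# Bugs discussed in this post, mapped to the MySQL release that fixed them.
FIXED_IN = {70577: "5.6.15", 68041: "5.6.13", 72548: "5.6.20"}


def missing_fixes(reported_version, fixed_in=FIXED_IN):
    """Bug ids whose fix shipped after the reported version."""
    return sorted(
        bug for bug, version in fixed_in.items()
        if not fix_expected(reported_version, version)
    )


print(missing_fixes("5.6.10"))  # all three bugs were fixed after 5.6.10
```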

It does seem, however, that Amazon changed the innodb_version: right now it is 1.2.10, while a couple of weeks ago it was 1.2.8.

My question here is: does Amazon have the ability to keep up with MySQL bug fixes and regularly update their software? So far it does not seem so.

Amazon Aurora Architecture

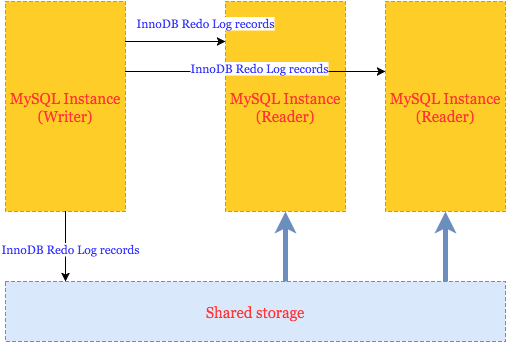

My understanding of Amazon Aurora in simplified form is the following:

That is, all Aurora instances share the same storage, and that makes it very easy to start new “Reader” instances over the same data.

Communication between Writer (only 1 Writer allowed) and Readers is done by transferring records similar to InnoDB redo log records. And this really limits how many Writers you can have (only one). I do not believe it is possible to implement a proper transactional coordinator between two Writers based on redo records.

A similar approach is used to update data stored on shared storage: Aurora just applies redo log records to the data.

So, updating data this way, Aurora is able to:

- Avoid data writes to storage

- Avoid binary logs

- Avoid InnoDB transactional logs

- Disable doublewrites

- Disable InnoDB checksums

Aurora makes claims about significant performance improvements, but we need to keep in mind that EACH WRITE goes directly to storage and it has to be acknowledged by 4 out of 6 copies (synchronous writes). Aurora Writer works in some kind of “write through” mode – this is needed, as I understand, to make Reader see changes immediately. I expect it also comes with a performance penalty, so whether the performance gain is bigger than the performance penalty will depend on the workload.
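To make the cost of the 4-out-of-6 rule concrete, here is a minimal sketch. It assumes the six copies are written in parallel (my assumption, not something Amazon documents in the materials above), in which case a write is acknowledged when the fourth-fastest copy answers. The function name and the latency figures are made up for illustration.

```python
def quorum_ack_latency(copy_latencies_ms, quorum=4):
    """Latency at which a write has been acknowledged by `quorum` copies.

    With parallel writes, the write completes when the quorum-th fastest
    copy answers, so the result is the quorum-th smallest latency.
    """
    if quorum < 1 or quorum > len(copy_latencies_ms):
        raise ValueError("quorum must be between 1 and the number of copies")
    return sorted(copy_latencies_ms)[quorum - 1]


# Hypothetical acknowledgement times for six copies, in milliseconds.
latencies = [1.0, 1.2, 1.5, 4.0, 9.0, 30.0]
print(quorum_ack_latency(latencies))  # 4.0
```

Under this model the two slowest copies do not delay the write, but every write still waits for the fourth acknowledgement, which is where I expect the penalty to come from.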

Now, I should give credit to the Amazon engineering team for a proper implementation of shipping and applying transactional redo logs. It must have required a lot of work to change the InnoDB engine, and as we see it took probably a year (from the announcement to the general availability) for Amazon to stabilize Aurora. Too bad Amazon keeps their changes closed, even when the main codebase is an open source database.

Work with Transactional Isolation

Distributed computing is especially complicated from a transactional processing point of view (see for example a story), so I also wanted to check how Amazon Aurora handles transactional isolation levels on different instances.

Fortunately for Aurora, they have an easy way out by allowing only read statements on Readers, but we can still check isolation in some cases.

It seems that the only TRANSACTION ISOLATION level supported is REPEATABLE-READ. When I try to change to SERIALIZABLE or READ-COMMITTED, Aurora accepts this without an error, but silently ignores it. tx_isolation stays REPEATABLE-READ.

    mysql> SET SESSION TRANSACTION ISOLATION LEVEL SERIALIZABLE;
    Query OK, 0 rows affected (0.00 sec)

    mysql> SELECT @@GLOBAL.tx_isolation, @@tx_isolation;
    +-----------------------+-----------------+
    | @@GLOBAL.tx_isolation | @@tx_isolation  |
    +-----------------------+-----------------+
    | REPEATABLE-READ       | REPEATABLE-READ |
    +-----------------------+-----------------+
    1 row in set (0.00 sec)

    mysql> SET SESSION TRANSACTION ISOLATION LEVEL READ COMMITTED;
    Query OK, 0 rows affected (0.00 sec)

    mysql> SELECT @@GLOBAL.tx_isolation, @@tx_isolation;
    +-----------------------+-----------------+
    | @@GLOBAL.tx_isolation | @@tx_isolation  |
    +-----------------------+-----------------+
    | REPEATABLE-READ       | REPEATABLE-READ |
    +-----------------------+-----------------+
    1 row in set (0.00 sec)

Actually, there I face another worrisome behaviour: silent changes in Aurora without notification.

I am pretty sure that when I tried to use the SERIALIZABLE level a couple of weeks ago, it failed with an error: “SERIALIZABLE is not supported”. Now it is just silently ignored. So I assume Amazon continues to make changes, and I guess this is one of the changes from innodb_version 1.2.8 to 1.2.10. Is there a full changelog we can see?

The lack of SERIALIZABLE level is not a big problem in my opinion. In the end, we know that Percona XtraDB Cluster does not support it either.

But not being able to use READ-COMMITTED might be an issue for some applications; you need to check if your application is working properly with READ-COMMITTED silently set as REPEATABLE-READ.
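Since the server accepts the statement without an error, an application that depends on a specific level has to verify it itself. Below is a minimal sketch of such a check. The helper name `set_isolation_checked` is hypothetical, and `cursor` is assumed to be any DB-API 2.0 cursor connected to a MySQL-compatible server that exposes `@@tx_isolation`, as Aurora does in the session output in this post.

```python
ALLOWED_LEVELS = {
    "READ UNCOMMITTED",
    "READ COMMITTED",
    "REPEATABLE READ",
    "SERIALIZABLE",
}


def set_isolation_checked(cursor, level):
    """Request an isolation level and verify that the session really switched.

    Raises RuntimeError if the server accepted the statement but the
    effective level, as reported by @@tx_isolation, did not change to it.
    """
    # Normalise case and whitespace, and refuse anything that is not a
    # known level, since the value is concatenated into the statement.
    level = " ".join(level.upper().split())
    if level not in ALLOWED_LEVELS:
        raise ValueError("unknown isolation level: %r" % level)

    cursor.execute("SET SESSION TRANSACTION ISOLATION LEVEL " + level)
    cursor.execute("SELECT @@tx_isolation")
    effective = cursor.fetchone()[0]

    # The server reports levels with hyphens, for example READ-COMMITTED.
    if effective != level.replace(" ", "-"):
        raise RuntimeError(
            "requested %s but session is still %s" % (level, effective)
        )
    return effective
```

Called right after connecting, for example `set_isolation_checked(cursor, "READ COMMITTED")`, this turns the silent fallback into an explicit failure.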

I found another unusual behaviour between the reader and writer when I tried to execute ALTER TABLE statement on the Writer (this is another hard area for clusters: to keep a data dictionary synchronized).

Scenario:

READER:

execute long SELECT col1 FROM tab1

WRITER:

while the SELECT is running, execute ALTER TABLE tab1 ADD COLUMN col2 ;

Effect: SELECT on READER fails immediately with an error: “ERROR 1866 (HY000): Query execution was interrupted on a read-only database because of a metadata change on the master”

So there again I think Aurora does its best given architectural limitations and one-directional communication: it just chooses to kill read statements on Readers.

Query Cache

I should highlight the improvements to query_cache as a good enhancement. The query cache is enabled by default, and Amazon fixed the major issue with the MySQL query cache, where update queries may stall for a long time waiting on invalidation of query cache entries. This problem does not exist in Amazon Aurora. Amazon also adjusted query_cache to work properly on a Writer-Reader pair: the query cache on the Reader gets invalidated when data is changed on the Writer.

Config Review

Let’s make a quick review of the MySQL configuration that Aurora proposes.

These are variables which are set by default and you can’t change:

    | innodb_change_buffering        | none |
    | innodb_checksum_algorithm      | none |
    | innodb_checksums               | OFF  |
    | innodb_doublewrite             | OFF  |
    | innodb_flush_log_at_trx_commit | 1    |

Disabled doublewrite and checksums are not a surprise; I mentioned this above.

Also, innodb_flush_log_at_trx_commit is strictly set to 1. I think this is also related to how Aurora deals with InnoDB redo log records.

Disabled innodb_change_buffering is also interesting, and it can’t be good for performance. I guess Amazon had to disable any buffering of updates so changes are immediately written to the shared storage.

Diagnostic

Traditionally RDS does not give you good access to system metrics like vmstat and iostat, which makes troubleshooting quite challenging. The MySQL slow log is also not available, so that leaves us with only PERFORMANCE_SCHEMA (which is OFF by default).

One good thing is that we can access SHOW GLOBAL STATUS, and this can also be used for monitoring software.

One interesting observation: even after a heavy write load, these counters always stay at 0:

    | Innodb_buffer_pool_pages_dirty   | 0 |
    | Innodb_buffer_pool_bytes_dirty   | 0 |
    | Innodb_buffer_pool_pages_flushed | 0 |
    | Innodb_pages_written             | 0 |

I think this supports my guess about the architecture, that Aurora does not keep any changed pages in memory and just directly writes everything to storage.
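For anyone who wants to repeat this observation from monitoring software, here is a small sketch. It assumes you have already fetched the rows of SHOW GLOBAL STATUS as (Variable_name, Value) pairs with your MySQL client of choice; the helper name `stuck_at_zero` is my own.

```python
WRITE_COUNTERS = (
    "Innodb_buffer_pool_pages_dirty",
    "Innodb_buffer_pool_bytes_dirty",
    "Innodb_buffer_pool_pages_flushed",
    "Innodb_pages_written",
)


def stuck_at_zero(status_rows, names=WRITE_COUNTERS):
    """Return the counters from `names` whose reported value is 0.

    `status_rows` is an iterable of (Variable_name, Value) pairs, the shape
    returned by SHOW GLOBAL STATUS; values usually arrive as strings.
    Counters that are absent from the rows are skipped.
    """
    status = {name: value for name, value in status_rows}
    return [n for n in names if n in status and int(status[n]) == 0]
```

On a stock MySQL server under write load I would expect this list to be empty; on Aurora, in my tests, it contained all four counters.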

Final Thoughts

I will follow up this post with my benchmarks, as I expect the proposed architecture comes with serious performance penalties, although removing binary logs and eliminating doublewrites should show a positive improvement.

In general I think Amazon Aurora is quite an advanced proprietary version of MySQL. It is not revolutionary, however, and certainly not a “reimagined relational database” as Amazon presents it. This technology does not address the problems of scaling writes or sharding, and it does not handle cross-node transactions.

As for other shortcomings, I see there is no public bug database, no detailed user documentation and Aurora’s code is based on old MySQL source code.

Originally written by Vadim Tkachenko at Percona.